As drone technology evolves, the quality of camera footage and recordings from drones has improved as well. Today we can get high-resolution images from just about any commercial drone.

This has pushed vertical domain owners who invested heavily in drone operations, such as facilities management and security, to further process and utilize their aerial footage.

Specifically, most users want the ability to search for their “needle in the haystack”: automatically finding trespassers, identifying illegally parked cars, detecting hairline cracks on buildings, and more.

While it is hard to beat a human’s natural ability at object detection and recognition, computer vision wins out in endurance and consistency. Computer vision can quickly analyze hours of drone footage automatically and generate business insights that give you an upper hand over your competitors.

Let’s take a look at some of our work in the past year.

1. Agriculture

Case Study: Tree Counting

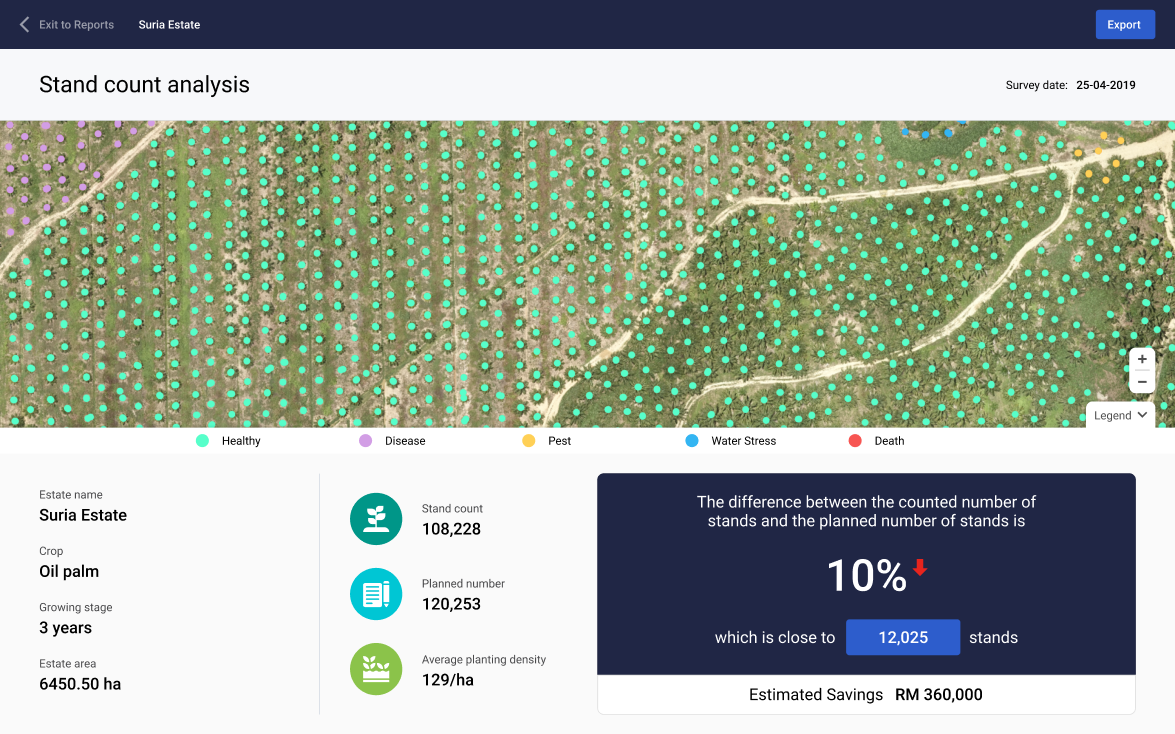

Garuda Robotics first took up computer vision work to automate and speed up the analysis of aerial imagery of palm oil plantations in Malaysia and Indonesia.

We trained a model that counted trees for palm oil estates that are typically over 2,000 hectares in size. Our latest version has an accuracy rate of over 95%, whilst cutting down data processing time of a 5,000-hectare plantation from days to less than an hour.

Our agriculture business also identifies other crops, helping those farmers build a tree database that’s intricately linked to our in-house plantation AI software, Plantation 4.0. This all-in-one platform helps plantation owners perform inventory checks of their tree assets, demarcate blocks of interest, and identify in near-real-time trees that are sick and require further care.

2. Security and Surveillance: People / Car Counting

Counting people and objects (e.g. cars) in aerial footage is especially challenging compared to its land-based counterparts.

First, we need a sizable amount of annotated footage from drone flights. Supervised deep learning models need good, manually labelled data to train on, and some more to test their accuracy.

Training a model on drone footage requires a larger dataset when compared to conventional image recognition pipelines, due to the range of payloads and operating conditions, such as time of day, lighting conditions, and flight altitude. All these factors can greatly influence image profiles. For example, a person looks very different from the front, obliquely from a drone, and when viewed top down.
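One common way to stretch a labelled dataset across more operating conditions is data augmentation. The sketch below is illustrative only and is not a description of our actual training pipeline: it treats an image as a plain list of grayscale pixel rows, and the function names, brightness range, and downsampling step are all assumptions chosen for the example.

```python
import random

def adjust_brightness(image, factor):
    """Scale every pixel by `factor`, clamping the result to 0-255.
    Roughly mimics different times of day or lighting conditions."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in image]

def downsample(image, step):
    """Keep every `step`-th pixel on both axes.
    Roughly mimics the same scene seen from a higher altitude."""
    return [row[::step] for row in image[::step]]

def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]

def augment(image, rng):
    """Apply a random combination of the transforms above (assumed ranges)."""
    out = adjust_brightness(image, rng.uniform(0.5, 1.5))
    if rng.random() < 0.5:
        out = flip_horizontal(out)
    if rng.random() < 0.5:
        out = downsample(out, 2)
    return out

if __name__ == "__main__":
    frame = [[0, 50, 100, 150],
             [10, 60, 110, 160],
             [20, 70, 120, 170],
             [30, 80, 130, 180]]
    rng = random.Random(42)  # fixed seed so runs are repeatable
    for variant in (augment(frame, rng) for _ in range(3)):
        print(variant)
```

In a real pipeline the bounding-box labels would have to be flipped and rescaled together with the pixels, and augmentation supplements rather than replaces footage captured under genuinely different conditions.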

Case Study: Face Counting

For example, when we counted faces in a crowd, we augmented the data captured from the sky to replicate eye-level faces as closely as possible. This included modifying the detector model to prioritize recognizing the top part of the head.

We worked with extremely small faces, as tiny as 10 by 10 pixel boxes, in each frame. We needed to prioritize learning the general geometry of face outlines over the embedding of multiple facial features (eyes, nose, mouth, ears) before deciding whether each anchor box was statistically significant enough to be classified as a face.

One side effect of this optimisation was a faster compute rate, processing at up to 40 frames per second. This matters to our security customers, who need to run these algorithms on large crowds using lightweight edge processors mounted on our drones.

3. Facilities & Infrastructure

In facilities and infrastructure management, traditional inspection methods are often labor-intensive and time-consuming. Data collection is harder still when the infrastructure is complex and relatively inaccessible to human inspectors.

Case Study: Defects Risk Assessment

We have recently developed an end-to-end inspection solution that captures aerial shots of building facades and performs an automated defects risk assessment (e.g. facade cracks, corrosion). Our defects assessment uses a multi-step process to maximize accuracy without compromising on processing time or privacy.

First, we pass all aerial inspection footage through a censorship model, which removes personally identifiable features from a scene (e.g. persons, windows, car plates). To further guard our system against privacy violations, we use only open-source datasets to develop the model.
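The masking step that follows detection can be sketched as below. This is a minimal illustration, not our production censorship model: it assumes an upstream detector has already returned bounding boxes for the sensitive regions, and the `mask_regions` name, the `(x0, y0, x1, y1)` box format, and the list-of-rows image representation are all hypothetical.

```python
def mask_regions(image, boxes, fill=0):
    """Return a copy of `image` with each box overwritten by `fill`.

    `image` is a list of pixel rows. Each box is (x0, y0, x1, y1) in pixel
    coordinates with x1 and y1 exclusive; boxes are clipped to the image.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    masked = [row[:] for row in image]  # copy so the raw frame is untouched
    for x0, y0, x1, y1 in boxes:
        for y in range(max(0, y0), min(height, y1)):
            for x in range(max(0, x0), min(width, x1)):
                masked[y][x] = fill
    return masked

if __name__ == "__main__":
    frame = [[9] * 6 for _ in range(4)]
    # Hypothetical detector output: one window and one car plate.
    sensitive = [(1, 0, 3, 2), (4, 2, 6, 4)]
    for row in mask_regions(frame, sensitive):
        print(row)
```

Overwriting the pixels outright, rather than blurring them, means the masked detail cannot be recovered by later stages, at the cost of also hiding any defect that sits inside a masked box.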

Subsequent downstream processes classify a dozen defects as specified by government bodies such as BCA, including rust, cracks, peeled paint, cracked glass, and so on. We found that, given the repetitive nature of many of our surfaces, the detectors can get very good on the same fixed set of images (e.g. for all HDB flats), but they risk overfitting if we need the model to generalise.

Case Study: Obstacle Detection On-Board

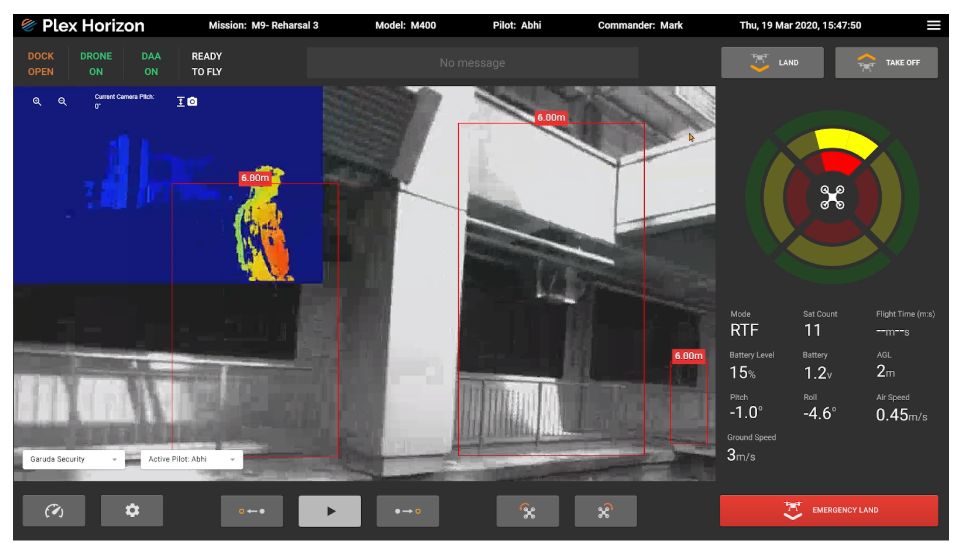

We also need an on-board obstacle avoidance system to keep the drone from being flown into a wall while inspecting a building (due to GPS drift or pilot error).

We have evaluated a number of obstacle avoidance techniques on-board the drone, such as monocular SLAM, LiDAR / IR depth sensors, blob detection, and even trained a monkey pilot at our Drone Operations Center to watch the live video feed and jam the brakes. We have now mostly settled on utilising stereoscopic cameras to build disparity maps as our primary DAA mechanism.

The resulting disparity maps, shown in the figure, represent objects at different distances from the drone. Both the pilot and the autopilot use this information to identify which regions of interest require immediate attention, which lie in the drone’s line of trajectory, and which failsafe mechanism to activate immediately to keep the drone safe.
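To make the link between disparity and distance concrete, the sketch below uses the standard relation for a rectified, calibrated stereo pair: depth = focal length × baseline / disparity. It is an illustration under assumed numbers, not our flight code; the focal length, baseline, safety distance, and function names are all hypothetical.

```python
def disparity_to_depth(disparity_px, focal_length_px, baseline_m):
    """Convert one disparity value (pixels) to depth (metres).
    Zero or negative disparity is treated as 'no match / very far'."""
    if disparity_px <= 0:
        return float("inf")
    return focal_length_px * baseline_m / disparity_px

def nearest_obstacle(disparity_map, focal_length_px, baseline_m):
    """Smallest depth found anywhere in the disparity map."""
    return min(
        disparity_to_depth(d, focal_length_px, baseline_m)
        for row in disparity_map
        for d in row
    )

def needs_braking(disparity_map, focal_length_px, baseline_m, safety_distance_m):
    """True when any pixel is closer than the chosen safety distance."""
    nearest = nearest_obstacle(disparity_map, focal_length_px, baseline_m)
    return nearest < safety_distance_m

if __name__ == "__main__":
    # Assumed camera: 700 px focal length, 10 cm between the two lenses.
    disparity_map = [[0, 7], [35, 14]]
    print(nearest_obstacle(disparity_map, 700, 0.10))
    print(needs_braking(disparity_map, 700, 0.10, safety_distance_m=3.0))
```

Because depth is inversely proportional to disparity, nearby objects produce large disparities and are resolved well, while distant objects collapse into a few disparity levels. A real system would also restrict the check to the region along the drone's trajectory and filter out noisy matches before triggering a failsafe.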

Privacy Challenge in Computer Vision

Our journey in computer vision has not been without hurdles. One of them is the PDPA ruling in Singapore, which does not allow us to collect personally identifiable information, even if only for training models, because our models are made commercially available to our customers.

Once the model is trained, PDPA applies again at deployment: we need to help our customers deliberately mask all personally identifiable information before detection can be done. This is especially true in inspection work, where most of our operations take place in public places such as residential and industrial areas, and identifiable but private features can unknowingly be fed into our detectors.

We cannot neglect the importance of privacy when developing any machine learning based solution.

Our Computer Vision Roadmap

There’s no silver bullet for deep learning computer vision problems. Each challenge involves a tradeoff between being slow but accurate and being fast but less accurate, and each use case has its own minimum acceptable speed or accuracy. For example, search and rescue operations have a higher tolerance for false positives in finding survivors, while façade crack detection can live with a few false negatives in the analysis.
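The false positive versus false negative tradeoff often comes down to where the detection confidence threshold is set. The sketch below is a toy illustration with made-up scores and labels, not measurements from our models: it computes precision (how many detections were real) and recall (how many real objects were found) at a few thresholds.

```python
def precision_recall(scores, labels, threshold):
    """Precision and recall when every score >= threshold counts as a detection.
    `labels` holds the ground truth (True = the object is really there)."""
    pairs = list(zip(scores, labels))
    tp = sum(1 for s, truth in pairs if s >= threshold and truth)
    fp = sum(1 for s, truth in pairs if s >= threshold and not truth)
    fn = sum(1 for s, truth in pairs if s < threshold and truth)
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 1.0
    return precision, recall

if __name__ == "__main__":
    # Made-up detector confidences and ground truth for six candidates.
    scores = [0.9, 0.8, 0.6, 0.4, 0.3, 0.1]
    labels = [True, True, False, True, False, False]
    for threshold in (0.2, 0.5, 0.7):
        p, r = precision_recall(scores, labels, threshold)
        print(f"threshold={threshold}: precision={p:.2f}, recall={r:.2f}")
```

On this toy data, the lowest threshold finds every true object but lets in more false alarms, which suits a search and rescue style use case. The highest threshold produces no false alarms but misses one true object, which is closer to the crack detection case described above.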

We will be launching a data science collaborator programme for selected partners to integrate their deep learning computer vision work into our platform. We will continue to handle the hard part of flying, ensuring the flight is legal, efficient, and repeatable, while our partners can attempt to parse our footage using their respective models.

Do contact us for more details if you or your company works on deep learning computer vision algorithms and would like to solve the drone footage challenge with us.

Summary

Understanding each use case and its needs is important in building the right solution for drone computer vision. We pride ourselves on bringing the latest models and engineering work into our products to address our customers’ needs, and we hope you can use our products to meet your business challenges.